This paradigm shift in academia due to artificial intelligence (AI) is not just on the horizon—it’s already here, and its impact is nothing short of revolutionary.

That previous sentence was written by ChatGPT, a free online AI tool available to anyone with a device, an email account and internet access.

Coastal Carolina University has its own policy regarding AI usage, located within the AI Central portion of CCU’s website. The University advises students to first check the syllabus for the class, then look to the assignment guidelines and confirm individual policies with the instructor.

Some students either use AI in the classroom themselves or know students who do. Kiley Sweeney, a sophomore intelligence and national security studies major, shared her knowledge of her peers’ AI usage.

“I don’t know anyone who uses AI for daily use,” Sweeney said. “The people I know who use it is for assignments for school.”

The University states students using AI are not permitted to submit any personal information or information relating to CCU. This is because information submitted to AI platforms and services is accessible by others.

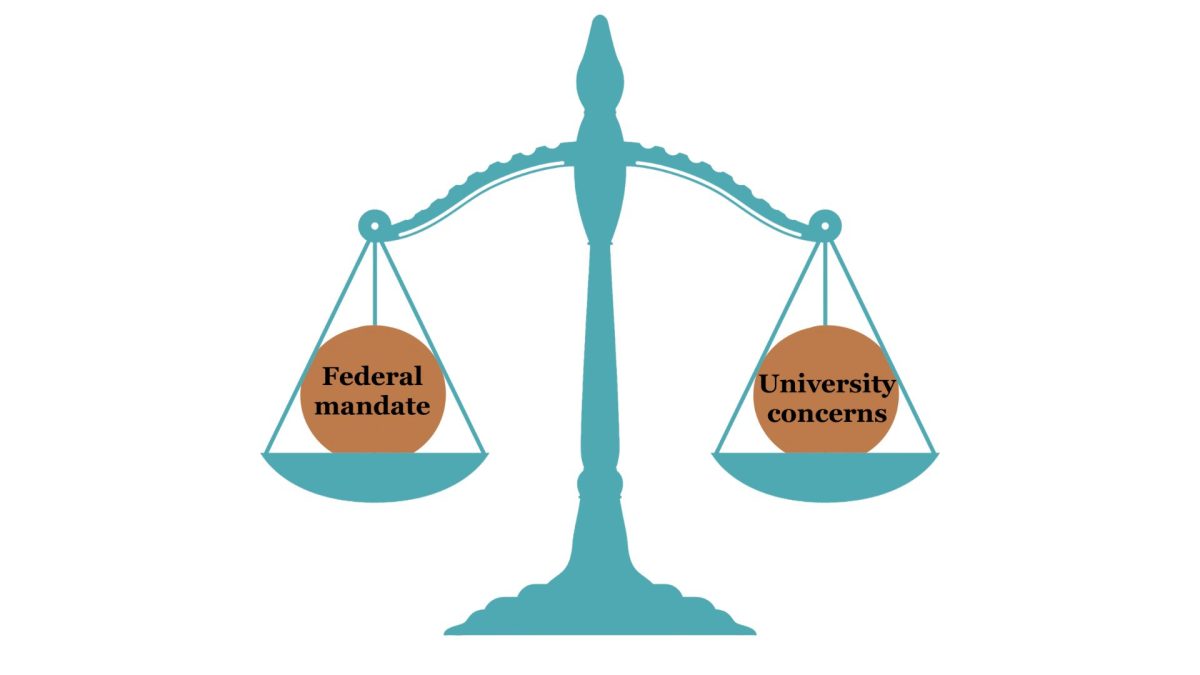

Coastal Carolina is constantly trying to keep up with the fast-paced nature of AI and its improvements. To keep pace, the University’s AI policy committee meets every two weeks. The committee is responsible for building a CCU website that shows the updated policies and guidelines for AI usage in school.

Interim Provost Sara Hottinger calls the meetings every two weeks and has taken a proactive approach toward AI regulation at CCU. Wes Fondren, associate professor and member of CCU’s AI policy committee, attributes the success and speed of the committee to Hottinger’s efforts to stay “on top” of the policy.

Fondren has been able to advocate for the benefits of AI to faculty members, administrators and CCU President Michael Benson.

“This is the approach the University has taken: training, support, citation, those sorts of things rather than prevention,” Fondren said.

Fondren also thinks AI is a tool that will help students once they are taught how to use it properly, and he incorporates AI into some of his course assignments.

“Well, because it’s so good at brainstorming, I want the students to see that,” Fondren said.

For example, one assignment asked students to use AI to brainstorm solutions for problems based on communication theories, then choose one of those solutions and explain why it was the best of the options the AI came up with. Fondren said the assignment was similar to a pitch from the show “Shark Tank.”

“They evaluate learning at a high level, and I get to watch really interesting videos instead of papers,” Fondren said.

Rather than the University setting a single policy for CCU as a whole, individual professors determine whether the use of AI is allowed in their classrooms.

Emma Howes, an associate professor of English, does not incorporate AI usage into her classroom, but she does support other professors and their students using AI.

Howes said she believes AI should be used as a tool in classrooms, not as a crutch.

“I do think that it has a tremendous potential to serve as a tool for students as they’re thinking about learning to critique digital literacy,” Howes said. “It’s really something we’ll be seeing more and more of over the next, I don’t know, few years, decades or whatever it may be, in terms of the writing that we encounter online or you know, in other spaces.”

She views AI as a form of expression and an outlet for students. AI should speak through the students, Howes said, not the other way around.

Since Fondren’s students are encouraged, and sometimes required, to use AI, there are no punishments for using AI in his classroom. However, not all professors take the same approach. Fondren sees AI as a very useful tool in certain disciplines, but said the decision to allow AI in the classroom should ultimately be up to the professor.

Howes has a positive outlook on AI and its future, but her students are not allowed to have it write assignments for them.

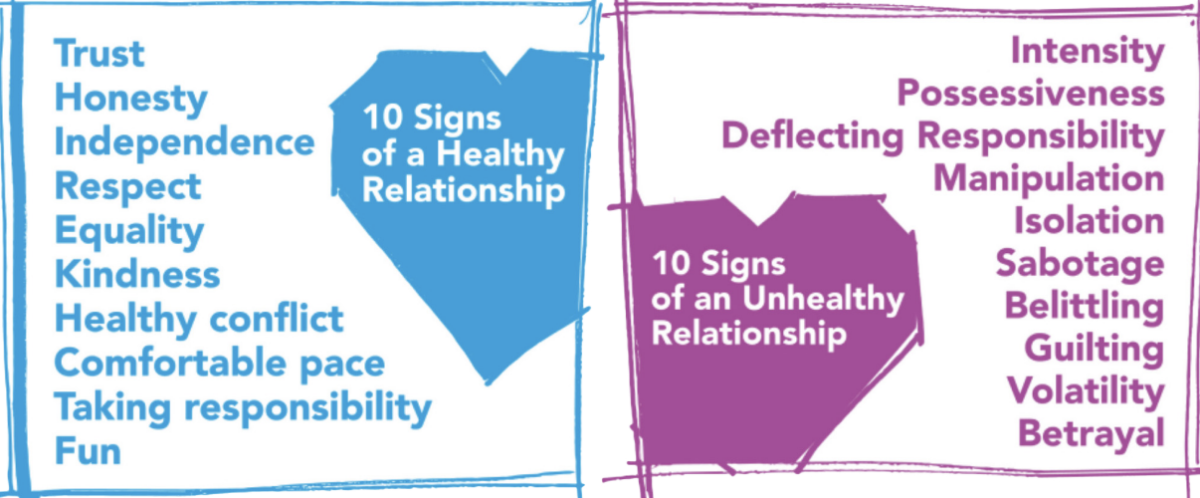

“In my particular classes, we are not using it. So, the unauthorized use of it would be treated like any other plagiarism case,” she said.

Howes finds it somewhat easy to tell when a student is using a free AI tool such as ChatGPT, since the language isn’t as smooth as that produced by paid AI software.

Plagiarism has become an issue with newly emerging AI platforms. The University tries to combat this by posting citation guides for AI usage on its website.

Micah Swift, a freshman psychology major, voiced his concerns about AI usage and plagiarism, specifically with AI art generators.

“I don’t like it because it takes art that has been made by humans, and then rebrands it as its own without crediting any of the art that it took, artstyle or any artists,” Swift said.

Swift believes AI can be a reliable tool when used correctly, but that it can be misused to cheat or for other questionable purposes. He has encountered professors who allowed and suggested using AI, but he chose not to take the opportunity.

Howes believes AI will be used heavily in the future and in many different ways.

“I imagine that many of us will find ways eventually to incorporate it, you know, and to incorporate it from a critical lens, right, to incorporate it in a way that kind of goes back to that idea of being appropriated by versus of reading a discourse,” Howes said.

In another assignment, Fondren had his students grade a paper written by AI. The students needed to locate the mistakes the AI had made and lost points for any they did not catch. He gave this assignment to show his students a real-world application of how AI can be used in the workforce.

“What I was trying to teach them was this is what you’re going to probably be doing once you leave the beautiful campus of Coastal Carolina and go in the job market,” Fondren said.